Combined Jewish Philanthropies (CJP), Greater Boston’s Federation, has long played the part of a central convener, grant maker, and thought leader for its 14 Jewish day schools. One of its thought leadership roles is the collection and analysis of school data. Over time, CJP has built a number of methods to collect and analyze the unique set of data that comes out of our schools. In the past two years, we have reevaluated the goals and process of CJP’s day school data collection efforts in order to increase their value and lessen their burden on the schools.

We’ve centered all of our efforts on two use cases for data collection:

Network insight: What data might we collect to push our collective day school agenda forward and create a sustainable ecosystem of schools?

School-specific insight: What data might we collect that would allow for smarter action by school leaders?

In reviewing our previous methods of data collection, we identified three challenges.

Data Entry is Time-Consuming

Given the tremendous pressure school leaders and administrators are under, taking on another tedious task is difficult. The burden is compounded by the fact that many schools also belong to other associations, such as the Association of Independent Schools of New England (AISNE), that require their own data entry. Asking our professionals to take a leap of faith and enter data that may eventually pay off for them and for our network as a whole is no small request.

Analysis is Impossible Without Common Definitions

Another challenge data collectors face is defining the information they request clearly enough that every administrator understands it the same way. As just one example, to help schools benchmark themselves, CJP long asked for information on the size of each school’s admission pipeline. The hypothesis was that if the conversion rate from pipeline to applications received varied by school, a school with a lower rate might ask what it could learn from a school with a higher pipeline-to-application conversion rate.

The problem is that the concept of an admission pipeline can differ dramatically between schools. One school might count a family as part of its pipeline once it has collected an email address, while another might count only families with whom it has had a substantive connection by email or over the phone. Aside from hard measures like gross tuition, net tuition, and enrollment size, the vast majority of data points we previously collected ran up against this definitional challenge.
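To make the definitional problem concrete, here is a minimal Python sketch of the pipeline-to-application conversion rate, assuming the rate is simply applications received divided by pipeline size. The function name and all figures are hypothetical illustrations, not CJP or school data: they show how one school's rate can shift from 10% to 40% purely because of which definition of "pipeline" it uses.

```python
def conversion_rate(applications: int, pipeline_size: int) -> float:
    """Hypothetical helper: share of pipeline families who went on to apply."""
    if pipeline_size <= 0:
        raise ValueError("pipeline_size must be positive")
    return applications / pipeline_size


# Hypothetical school: the same 40 applications, two pipeline definitions.
applications = 40
pipeline_email_only = 400   # counts every family whose email address was collected
pipeline_substantive = 100  # counts only families with a substantive email or phone connection

rate_email = conversion_rate(applications, pipeline_email_only)
rate_substantive = conversion_rate(applications, pipeline_substantive)

print(f"email-address definition: {rate_email:.0%}")            # 10%
print(f"substantive-contact definition: {rate_substantive:.0%}")  # 40%
```

Because only the denominator changes, the gap between the two rates says nothing about admission practice; comparing rates across schools becomes meaningful only once every school counts its pipeline the same way.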

Framing Data for Action is Hard

This is perhaps the most critical challenge, and there is no easy solution for it. Even with the data in hand and common definitions, a central data collector must frame the information so that it comes across as accurate, credible, and clear enough to prompt a school, or the network, to question its current tactics. Compounding this, school heads and administrators have different levels of comfort with data and different capacities to act on it.

Solutions

While we certainly haven’t found a perfect solution to all of these challenges, here are a few steps we’ve started taking to address them.

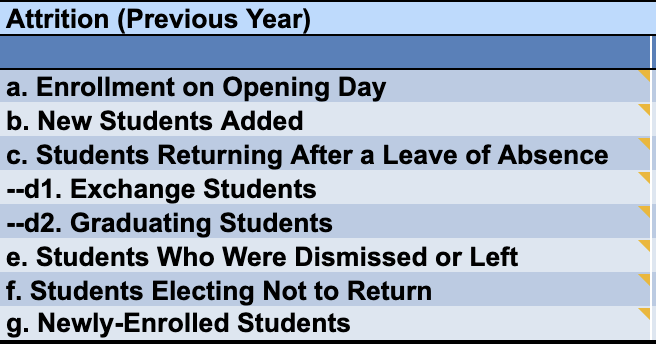

Lean on DASL for Thoughtful Definitions

Through Prizmah’s partnership with the National Association of Independent Schools (NAIS), our schools gained access to NAIS’s Data Analysis for School Leadership solution (DASL). One advantage of this system is the precision of its data-entry fields and of the definitions it provides to the people entering data. Working with school experts in each area of data collection, DASL’s designers have refined the information they request and defined each item carefully.

DASL’s definition of student attrition is a good example of this precision: